Building a data center is a major project that involves extensive planning and specialist knowledge to create a facility capable of housing critical IT infrastructure. The venture begins with a detailed planning and pre-design phase that includes site selection, permitting, and understanding the specific needs of the organization it serves. A well-designed data center ensures high availability, efficient energy usage, and flexibility for the future growth and integration of new technologies.

As the design phase progresses, data center architects and engineers focus on key infrastructure elements such as power supply, cooling systems, and networking capabilities. These components are essential in constructing a reliable and scalable data center. The physical construction of the data center requires a synchronized effort to install the infrastructure that supports its operations. Once built, data centers require continuous monitoring and management to ensure they operate within the desired parameters and adhere to sustainable practices.

Key Takeaways

- Data centers require meticulous planning and design to support IT infrastructure needs.

- Key infrastructure elements like power and cooling systems are central to data center design.

- Ongoing management and sustainability are critical for data center operations post-construction.

Planning and Pre-Design

The initial phase of building a data center focuses on aligning with business objectives, selecting an optimal site, outlining the financial scope, and adhering to industry compliance and standards. This stage lays the groundwork for a facility that supports digital transformation while ensuring scalability, reliability, and redundancy.

Business Objectives and Strategy

A comprehensive understanding of the business’s short- and long-term objectives is crucial. The data center should be planned with scalability in mind to accommodate future expansion and digital transformation initiatives. The decision to build or buy must align with business strategy, taking into account the reliability and uptime targets the facility must meet, which are often expressed using the Uptime Institute’s Tier classification (Tier I through Tier IV).

Site Selection

Selecting a site requires evaluating factors like geographical location, susceptibility to natural disasters, connectivity options, and energy availability. The site should allow for adequate redundancy in power and data infrastructure, ensuring continuous operation.

Budget Considerations

Budgeting should cover both the upfront construction costs and ongoing operational expenses. Build vs buy decisions have significant financial implications, so accurate and detailed financial planning is essential for managing the total cost of ownership.
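To make the total cost of ownership comparison concrete, here is a minimal sketch that adds upfront cost to operating cost over a planning horizon. The function name, the cost figures, and the optional `opex_growth` parameter are illustrative assumptions, not figures from any real project; a real model would also account for financing, depreciation, and refresh cycles.

```python
def total_cost_of_ownership(capex, annual_opex, years, opex_growth=0.0):
    """Sum upfront cost and yearly operating cost over the planning horizon.

    opex_growth is an assumed yearly escalation rate (0.03 means 3% per year).
    """
    total = capex
    for year in range(years):
        total += annual_opex * (1 + opex_growth) ** year
    return total


# Hypothetical figures, for illustration only.
build = total_cost_of_ownership(capex=10_000_000, annual_opex=1_000_000, years=10)
lease = total_cost_of_ownership(capex=500_000, annual_opex=2_200_000, years=10)

print(f"Build: ${build:,.0f}")
print(f"Lease: ${lease:,.0f}")
```

Changing the horizon or the escalation rate can flip the comparison, which is why the build vs buy decision benefits from testing several scenarios rather than a single estimate.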

Compliance and Standards

The design must comply with industry standards such as EN 50600, the European standard series covering data center facilities and infrastructures. Compliance helps ensure that the data center meets the requirements for reliability, scalability, and redundancy needed to support the company’s uptime and data security needs.

Designing Data Centers

The design of data centers is a complex endeavor that merges architectural finesse with technological proficiency. Successful data center design balances functionality with efficiency, taking into account future scalability and adherence to standards.

Architectural Design

Data centers need a robust architectural design that covers the physical structure and also aligns with strategic operational objectives. Architects often collaborate with engineering teams on a layout that supports the weight of heavy IT equipment and promotes an efficient workflow. They must ensure compliance with building codes and integrate sustainable building practices. Companies like Amazon Web Services and Equinix may design their facilities with specific operational profiles in mind, which directly influences architectural considerations.

Key Architectural Considerations:

- Structural load: Bearing the weight of IT equipment and energy systems.

- Space planning: Optimizing layout for equipment, maintenance, and potential expansion.

Mechanical, Electrical, and Plumbing (MEP)

MEP design is fundamental to maintaining continuous operations in a data center. It encompasses power distribution, cooling mechanisms, and the plumbing infrastructure essential for heat management and emergency response. MEP engineering must adhere to codes and guidelines from bodies such as ASHRAE so that services like Google Cloud’s can run without interruption.

Critical MEP Components:

- Electrical systems (redundancy levels, UPS)

- Cooling systems (CRAC units, containment)

- Plumbing (fire suppression, leak detection)

Technical Specifications

The technical specifications of a data center’s design hinge on specific standards and practices. Design guidance from vendors such as Vertiv influences choices for IT infrastructure, aiming for optimal performance and reliability. Judicious planning of network layout and storage configurations helps data center operations meet client expectations for uptime and service levels.

Technical Specifications:

- Networking: Structured cabling systems, switch configurations.

- Storage: Determination of capacity, type (e.g., SSD, HDD), and redundancy.

Key Infrastructure Elements

The construction of a data center revolves around the integration of critical infrastructure elements, each designed to ensure optimal performance, reliability, and security.

Power Infrastructure

Power is the lifeblood of any data center, ensuring that all systems operate without interruption. The power infrastructure is composed of a primary power supply that is supported by backup power systems, such as uninterruptible power supplies (UPS) and generators, which provide emergency power in the event of outages. It’s vital to balance power consumption with capacity to achieve efficiency and prevent overload.

- Backup Power:

  - UPS (Uninterruptible Power Supplies)

  - Diesel Generators

Cooling Infrastructure

Efficient cooling systems are crucial to maintain the proper temperature and ventilation within a data center. The cooling infrastructure deals with the excess heat generated by IT equipment, using a variety of methods like computer room air conditioners (CRACs) and advanced liquid cooling solutions. The aim is to optimize the cooling capacity to match the heat load, thus maintaining the necessary environmental conditions for equipment longevity.

- Cooling Systems:

  - CRAC Units

  - Chilled Water Systems

  - Hot/Cold Aisle Containment
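Matching cooling capacity to heat load starts from the fact that nearly all electrical power drawn by IT equipment ends up as heat. The sketch below converts an IT load in kilowatts into BTU per hour and tons of refrigeration using standard unit conversions. The function name and the `overhead_fraction` allowance for non-IT heat sources (lighting, people, power distribution losses) are assumptions for illustration; real sizing relies on a detailed engineering study.

```python
BTU_PER_HOUR_PER_KW = 3412.14  # 1 kW of heat is about 3,412 BTU/hr
KW_PER_TON = 3.517             # 1 ton of refrigeration = 12,000 BTU/hr, about 3.517 kW


def cooling_requirement(it_load_kw, overhead_fraction=0.1):
    """Estimate the heat to be removed for a given IT load.

    overhead_fraction is an assumed allowance for non-IT heat sources.
    """
    heat_kw = it_load_kw * (1 + overhead_fraction)
    return {
        "heat_kw": heat_kw,
        "btu_per_hour": heat_kw * BTU_PER_HOUR_PER_KW,
        "tons": heat_kw / KW_PER_TON,
    }


# Hypothetical 500 kW IT load.
estimate = cooling_requirement(500)
print(f"{estimate['heat_kw']:.0f} kW of heat, "
      f"{estimate['btu_per_hour']:,.0f} BTU/hr, "
      f"{estimate['tons']:.1f} tons of cooling")
```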

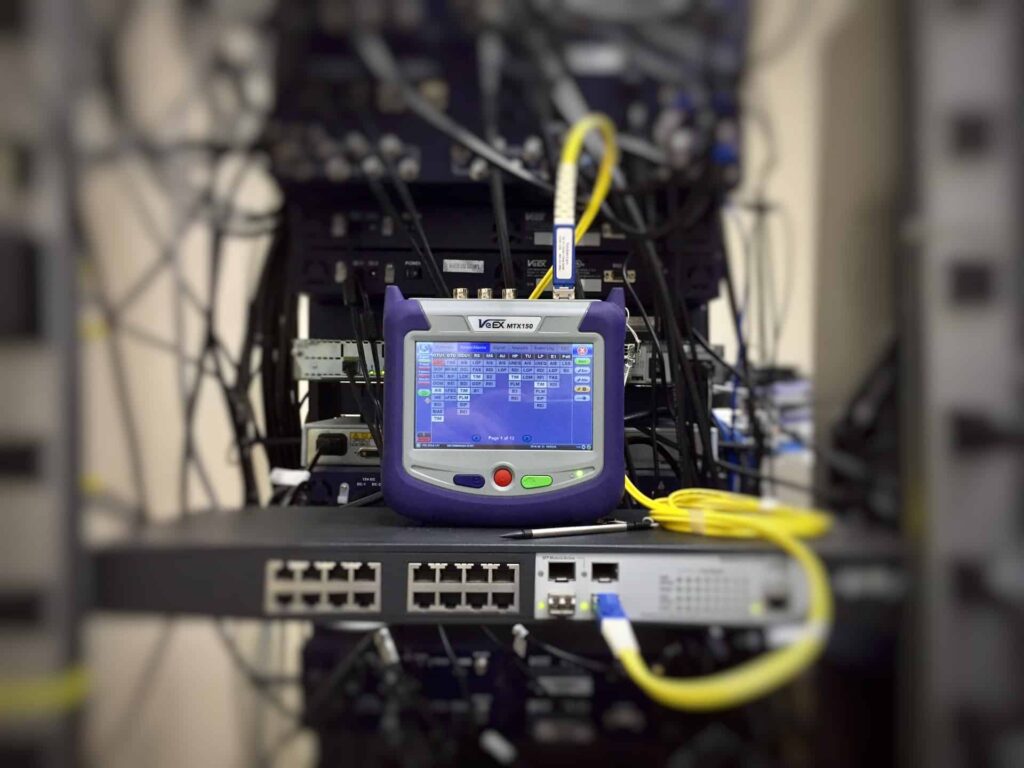

Networking Infrastructure

Networking is the backbone for data exchange within and outside the data center. The networking infrastructure encompasses a range of hardware, such as routers, switches, and cabling, to provide robust connectivity and bandwidth. Careful design is required to ensure redundancy and prevent single points of failure, facilitating uninterrupted data flow.

- Networking Components:

  - High-speed Cabling

  - Redundant Network Switches

Security and Safety Systems

Security encompasses both physical security measures and cybersecurity protocols to safeguard the data center. Surveillance, biometric access controls, and intrusion detection systems are employed to protect against unauthorized access. Fire safety is addressed with advanced suppression systems and regular inspections to ensure compliance with safety standards.

- Physical Security:

  - Surveillance Cameras

  - Access Control Systems

- Fire Safety:

  - Early Smoke Detection

  - Fire Suppression Systems

The effective management of these key elements is instrumental for the data center to operate smoothly and efficiently, underscoring the facility’s resilience and dependability.

Building Data Centers

Constructing a data center requires detailed planning, a skilled team for project management, and adept handling of on-site challenges. After the physical structure is built, rigorous testing and commissioning ensure the data center’s operations meet the required standards.

Construction Management

Effective project management is central to constructing a data center. Construction companies must appoint experienced managers who oversee every aspect of the build, from initial design to material procurement and labor coordination. A project manager ensures that the construction schedule is adhered to and that all deliverables align with the client’s specifications. They also mitigate risks and adjust strategies in response to unforeseen issues.

On-Site Challenges and Solutions

Data center construction can present unique on-site challenges. Structural designs must accommodate extensive cabling, sophisticated power systems, and cooling equipment. Unexpected ground conditions or weather-related delays can disrupt progress. Solutions include pre-emptive geological surveys and incorporating flexible design elements that can adapt to variable conditions. Fault-tolerant power and cooling systems are also essential to ensure continuous operation post-construction.

Testing and Commissioning

Before a data center becomes operational, a phase known as testing and commissioning is critical. This phase verifies that the infrastructure performs according to the design specifications. Tests on electrical systems, backups, cooling equipment, and security features are conducted. It necessitates a collaborative effort from engineers, construction teams, and the end-user to certify that the facility is ready for active service.

Operational Considerations

In managing a data center, meticulous attention to infrastructure, robust monitoring systems, and rigorous maintenance protocols are paramount for seamless operation.

Infrastructure Management

Data center infrastructure management (DCIM) integrates technology and processes to oversee a facility’s physical assets and resources. Power is a core concern of DCIM, which tracks both whether supply is sufficient and how efficiently energy is used. Strategies for effective management include:

- Temperature and Humidity Controls: Precise regulation avoids equipment overheating and moisture-related damage.

- Disaster Recovery Planning: Facilities must establish resilient systems to maintain operations amid crises.

Data Center Monitoring

Continuous monitoring is crucial for operational stability. This includes:

- Real-Time Analytics: Detection of irregularities for immediate resolution.

- Environment Tracking: Tracking temperature and humidity to keep them within set thresholds.
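Threshold-based environment tracking can be sketched in a few lines. The example below checks sensor readings against a temperature and humidity range and returns alert messages. The `Reading` type, sensor names, and limits are assumptions for illustration: the temperature range loosely follows the commonly cited ASHRAE recommended inlet range of 18 to 27 °C, while the humidity limits are placeholders that each facility would set for itself.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    temperature_c: float
    relative_humidity: float


# Illustrative limits; real thresholds depend on the equipment and site policy.
TEMP_RANGE_C = (18.0, 27.0)
HUMIDITY_RANGE_PCT = (20.0, 80.0)


def check_reading(reading):
    """Return a list of alert strings for values outside the set thresholds."""
    alerts = []
    low, high = TEMP_RANGE_C
    if not low <= reading.temperature_c <= high:
        alerts.append(f"{reading.sensor}: temperature {reading.temperature_c} C out of range")
    low, high = HUMIDITY_RANGE_PCT
    if not low <= reading.relative_humidity <= high:
        alerts.append(f"{reading.sensor}: humidity {reading.relative_humidity}% out of range")
    return alerts


# Hypothetical readings from two rack-level sensors.
readings = [Reading("rack-a1", 22.0, 45.0), Reading("rack-b2", 31.5, 85.0)]
for reading in readings:
    for alert in check_reading(reading):
        print(alert)
```

In practice a DCIM platform would collect these readings continuously and route alerts to operators; the check itself stays this simple.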

Maintenance and Servicing

Regular servicing ensures that all systems operate within their designed specifications. Key aspects are:

- Scheduled Inspections: They preempt equipment failures through early identification of potential issues.

- Collaboration: Teams work cohesively, sharing information enabled by DCIM tools for coordinated maintenance efforts.

Sustainable Practices

In the context of data centers, sustainability now extends beyond mere energy savings to encompass broader considerations under the environmental, social, and governance (ESG) umbrella. Fostering sustainable practices involves meticulous strategies in two critical areas: energy efficiency and comprehensive adherence to ESG standards.

Energy Efficiency

Energy efficiency in data centers revolves around optimizing power usage, commonly gauged by Power Usage Effectiveness (PUE): the ratio of total facility energy to the energy consumed by IT equipment. A lower PUE, closer to the ideal of 1.0, indicates a more energy-efficient data center. To achieve this, data centers may:

- Audit: Conduct regular energy audits to establish baseline consumption.

- Cooling: Optimize cooling systems, which account for a significant portion of energy use.

- Equipment: Retrofit or replace older equipment with more energy-efficient models.

- Virtualization: Increase the use of virtualization technologies to run multiple virtual servers on single physical machines, reducing overall energy consumption.

| Strategy | Implementation Example | Outcome |
|---|---|---|
| Energy Audits | Evaluating existing infrastructure | Identifying inefficiency hotspots |
| Cooling Optimization | Using cold aisle containment | Reducing unnecessary cooling expenditure |
| Equipment Upgrade | Investing in Energy Star-rated devices | Lowering electricity usage |
| Virtualization | Leveraging cloud computing resources | Decreasing server count and associated energy demand |
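PUE is calculated as total facility energy divided by the energy consumed by IT equipment, so a value of 1.0 would mean every kilowatt-hour reaches the IT load. The following sketch computes PUE and the share of energy spent on overhead such as cooling and power conversion. The function names and meter readings are hypothetical.

```python
def power_usage_effectiveness(total_facility_kwh, it_equipment_kwh):
    """Return PUE: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue):
    """Fraction of facility energy that does not reach IT equipment."""
    return 1 - 1 / pue


# Hypothetical monthly meter readings, for illustration only.
monthly_pue = power_usage_effectiveness(
    total_facility_kwh=1_500_000, it_equipment_kwh=1_000_000
)
print(f"PUE: {monthly_pue:.2f}, overhead share: {overhead_share(monthly_pue):.0%}")
```

Tracking this figure before and after each efficiency measure gives a simple way to see whether the change paid off.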

Environmental, Social, and Governance (ESG)

ESG criteria shape the social and ethical considerations alongside the environmental impact of data centers. These standards can be operationalized through:

- LEED Certification: Data centers can pursue Leadership in Energy and Environmental Design (LEED) certification to validate their sustainability achievements.

- Carbon Footprint: Methods to reduce greenhouse gas emissions include sourcing greener energy and improving overall energy efficiency.

- Resource Management: Implementing effective e-waste management protocols ensures responsible consumption and waste handling.

| ESG Component | Consideration | Approach |
|---|---|---|
| Environmental | Minimize emissions | Utilize green energy sources |
| Social | Community impact | Engage in fair labor practices |
| Governance | Corporate responsibility | Adopt transparent reporting measures |

Through judicious planning around these areas, data centers can lessen their environmental impact while aligning with socially responsible and ethically sound governance practices.

Data Center Types and Models

The landscape of data centers has evolved into several models, each designed to meet distinct organizational needs in terms of control, scalability, and management. Here, we explore the primary types.

Colocation Data Centers

Colocation data centers rent space to businesses for their hardware. The provider supplies the physical security, power, cooling, and networking facilities required to house servers, while customers manage their own equipment. This model suits organizations that want dedicated infrastructure, for example as part of a hybrid cloud setup, without the capital expense of building their own facility.

Enterprise Data Centers

An enterprise data center is built, owned, and operated by the company it supports and is typically located on the company’s premises. These data centers are designed for exclusive use by a single organization, offering complete control over the data infrastructure. They are often employed by large-scale enterprises with significant data processing and storage demands.

Cloud and Edge Data Centers

Cloud data centers are the backbone of cloud computing services, offering businesses and individuals virtual servers to store and manage data. They provide on-demand resources that scale with a company’s needs through public, private, or managed options. Edge data centers, on the other hand, are small-scale facilities located close to the edge of the network, intended to deliver low-latency processing and storage to support real-time data analytics near the user base.

Risk Management

Effective risk management is essential for ensuring the continuity of operations in data centers. This section covers planning for uptime and outages, disaster recovery strategies, and fire prevention and suppression measures.

Uptime and Outage Planning

Data centers strive to achieve the highest possible uptime. They must have a comprehensive plan that includes redundant systems and real-time monitoring to anticipate and prevent outages. Regularly updated and tested backup protocols are crucial for rapid recovery in the event of an incident. Strategies may include:

- Redundancy: Utilize N+1 or 2N redundancy for critical systems.

- Monitoring: Deploy advanced monitoring tools to detect anomalies quickly.
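The redundancy schemes above translate directly into equipment counts. In this notation, N is the number of units needed to carry the load, N+1 adds one spare, and 2N duplicates the whole set. The sketch below works this out for a given load; the function name and the example load and unit capacity are assumptions for illustration.

```python
import math


def units_required(load_kw, unit_capacity_kw, scheme="N+1"):
    """Return how many units (UPS modules, chillers, etc.) a scheme calls for."""
    n = math.ceil(load_kw / unit_capacity_kw)  # units needed just to carry the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1      # one spare unit
    if scheme == "2N":
        return 2 * n      # a fully duplicated set
    raise ValueError(f"Unknown redundancy scheme: {scheme}")


# Hypothetical 900 kW critical load served by 250 kW UPS modules.
for scheme in ("N", "N+1", "2N"):
    print(scheme, units_required(900, 250, scheme))
```

The jump from N+1 to 2N roughly doubles equipment cost, which is why the choice is usually tied to the uptime target rather than made by default.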

Disaster Recovery Strategies

Disaster recovery is a key component of business continuity planning in data centers. The focus here is on creating resilient infrastructures that can withstand various disaster scenarios, including natural and man-made events. Elements of a robust strategy typically involve:

- Geographical considerations: Choose data center locations with lower risks of natural disasters.

- Data replication: Implement data replication across geographically diverse sites.

Fire Prevention and Suppression

Fire safety in data centers is addressed through a combination of preventative measures and advanced suppression systems designed to extinguish fires quickly without damaging equipment. Components of fire safety include:

- Detection: Install high-sensitivity smoke detectors for early warning.

- Suppression: Use gas-based fire suppression systems, which are effective and safe for electronic equipment.

Implementing these measures diligently helps safeguard the data center against risks, maintaining operational integrity and protecting clients’ critical assets.

Integration and Scalability

When constructing a data center, careful consideration must be given to both integration of systems and the ability to scale. Effective integration ensures harmonious operation between components, while scalability addresses the data center’s future growth in terms of capacity, physical footprint, and technology adaptation.

Capacity and Expansion Planning

Capacity planning is a strategic approach to predicting the resources needed to handle growing data loads. A data center must anticipate not only day-one demand but also put in place a framework that supports growth. This means understanding workload requirements and forecasting scale from real data, such as server utilization rates and network traffic patterns. Physical footprint is another important factor: the space available for additional infrastructure determines how the data center can physically expand as demand grows.

- Key Aspects of Capacity Planning:

  - Assessing current and future workloads

  - Estimating the rate of technological changes

  - Evaluating space and power requirements for expansion
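A simple way to reason about expansion timing is to project load under a constant growth rate and see when it reaches installed capacity. The sketch below does this with compound growth. The function names, the 20% growth rate, and the load figures are assumptions for illustration; real forecasts would be fitted to measured utilization data.

```python
import math


def projected_load_kw(current_kw, annual_growth, years):
    """Project load forward assuming constant compound annual growth."""
    return current_kw * (1 + annual_growth) ** years


def years_until_full(current_kw, capacity_kw, annual_growth):
    """Return the number of whole years until projected load reaches capacity.

    Returns 0 if capacity is already reached and None if load is not growing.
    """
    if current_kw >= capacity_kw:
        return 0
    if annual_growth <= 0:
        return None
    return math.ceil(math.log(capacity_kw / current_kw) / math.log(1 + annual_growth))


# Hypothetical site: 400 kW today, 1,000 kW installed capacity, 20% yearly growth.
print(f"Load in 5 years: {projected_load_kw(400, 0.20, 5):.0f} kW")
print(f"Years until capacity is reached: {years_until_full(400, 1000, 0.20)}")
```

Because construction and procurement lead times are long, the useful output is the year in which expansion work must start, not the year capacity runs out.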

Modular and Scalable Solutions

Modular and scalable solutions provide the flexibility to expand incrementally, aligning with demand while controlling costs. By using modular designs, a data center can add or reconfigure its resources — such as power, cooling, or computing nodes — more efficiently. Scalability is not solely about scaling up; it also includes the ability to scale out or even scale down, ensuring that resources are optimized as per the changing needs. A scalable solution inherently accommodates increases in:

- Server Capacity: The ability to accommodate more powerful or additional servers as the workload increases.

- Storage: The potential to add more storage resources without disrupting existing operations.

- Networking: Upgrading network infrastructure to support higher data throughputs with minimal latency.

In executing modular and scalable strategies, data centers become resilient to workload fluctuations and remain prepared for future technology implementations without overhauling the core infrastructure.

Technology and Innovation

The landscape of data center construction is constantly evolving with new technologies aimed at enhancing efficiency, sustainability, and scalability. These innovations are crucial in addressing the increasing demand for data processing and storage.

Emerging Trends

Liquid Cooling: In the pursuit of energy efficiency and higher power densities, liquid cooling has emerged as a leading trend. Unlike traditional air cooling, liquid cooling exploits the higher thermal conductivity and heat capacity of liquids to remove heat from IT equipment more effectively. Data centers are increasingly adopting this technology because it supports higher computing loads while potentially reducing cooling energy costs.

- Benefits of Liquid Cooling:

  - Greater energy efficiency compared to air cooling

  - Support for more computing capacity, measured in megawatts of IT load

  - Enhanced server performance due to lower operating temperatures

Sustainable Design: Sustainability considerations are now at the forefront of data center design. Operators not only strive to reduce carbon footprints but also seek to integrate their facilities with local communities. Renewable energy sources, energy-efficient building materials, and waste reduction strategies are employed to create a more sustainable data center infrastructure.

Advancements in Data Center Technologies

Integration of AI and ML: Data centers are incorporating Artificial Intelligence (AI) and Machine Learning (ML) to optimize operations. These technologies can predict IT equipment failures, streamline cooling systems, and manage workloads efficiently, resulting in decreased downtime and improved resource allocation.

- AI and ML capabilities:

  - Predictive maintenance for servers and IT equipment

  - Intelligent cooling system management

  - Automated workload distribution for optimized resource usage
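Production predictive-maintenance systems rely on trained models, but the underlying idea of flagging unusual behavior early can be illustrated with a much simpler statistical stand-in. The sketch below uses a trailing-window z-score check, not machine learning, to flag sensor values that deviate sharply from recent history. The function name, window size, threshold, and temperature series are all assumptions for illustration.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing window's mean
    by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical inlet temperatures (deg C) with one sudden spike.
temps = [22.0, 22.1, 21.9, 22.0, 22.2, 22.1, 27.5, 22.0]
print(flag_anomalies(temps))
```

One limitation of this approach is that a spike inflates the statistics of the following windows, so a real system would typically use more robust baselines or learned models.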

Innovative IT Equipment: As the backbone of data center operations, servers, switches, and routers are receiving technological upgrades. These advancements lead to faster data processing and more reliable connectivity. Next-generation infrastructure is designed to handle the massive influx of data, supporting the growth of the Internet of Things (IoT) and edge computing requirements.

- Key features of modern IT equipment:

  - Higher throughput and processing power

  - Enhanced security features for data protection

  - Energy-efficient designs for reduced power consumption

The application of these technologies and innovative strategies is integral to the progress of data center construction and operation, ensuring that the facilities are prepared to meet the demands of modern computing while mitigating environmental impact.

Real World Applications

In the context of data center construction and operation, real-world applications offer invaluable insights into contemporary methodologies and the evolution of technological infrastructures.

Case Studies

Facebook (now Meta) has been at the forefront of innovative data center design, with a focus on energy efficiency and sustainability. The company has implemented advanced cooling systems that reportedly consume 80% less energy than those in traditional data centers. Its data centers are also reported to run on 100% renewable energy, setting a benchmark for the industry.

Microsoft, another industry leader, has explored underwater data centers to achieve energy efficiency and address cooling challenges. Project Natick showcased a two-year deployment of a submarine-like data center, capitalizing on seabed temperatures to cool servers and, thus, reduce environmental impact while maintaining operational reliability and speed. This case study exemplified how future data centers might leverage natural cooling sources to optimize performance.

By examining specific projects undertaken by these tech giants, one can better understand the practical realities of building and managing cutting-edge data centers catering to increasing workloads and complexity.

Frequently Asked Questions

In the planning and construction of a data center, several common questions arise regarding its components, design principles, investment, setup requirements, operational efficiency, and business models. Addressing these queries is crucial in building a state-of-the-art facility that meets the technological and business needs.

What are the critical components of a data center?

The key components of a data center include physical infrastructure like space for IT equipment, power systems for uninterrupted electricity supply, cooling systems to manage heat output, network infrastructure for connectivity, security apparatus to safeguard data, and management software for monitoring and controlling the data center environment.

What are the standard design principles to consider when constructing a data center?

When constructing a data center, design principles revolve around reliability, scalability, efficiency, and security. It is essential to adhere to industry standards and best practices to achieve redundancy, reduce downtime, and ensure a safe and secure environment for data handling.

How much financial investment is typically needed to build a data center?

The financial investment for building a data center can range widely depending on scale, location, technology, and infrastructure requirements. Smaller facilities may need millions of dollars, whereas large-scale projects might require investments of several hundred million to over a billion dollars.

What criteria define the setup requirements for a new data center?

Criteria for setting up a new data center include site selection based on risk assessment, access to adequate power supply, connectivity options, environmental considerations, and compliance with legal and regulatory standards. These factors dictate the physical and technical specifications of the facility.

How can a robust data center design architecture enhance operational efficiency?

A robust data center design ensures high availability and minimizes risks of downtime. Properly planned layout and infrastructure can lead to operational efficiencies through better airflow management, cost-effective power usage, and scalability that accommodates future growth without excessive reconfiguration.

What business models are common for operating data centers and how do they impact profitability?

Common business models for data centers include wholesale, retail colocation, and managed services. These models differ in terms of capital expenditure, operational control, and potential revenue streams. The chosen model impacts profitability by dictating the cost structure and customer engagement strategy.

Last Updated on February 12, 2024 by Josh Mahan
